The purpose of this document is to define the process by which extensions to the NWB core standard are proposed, evaluated, reviewed, and accepted. The goal is to facilitate community engagement while ensuring quality and utility of the NWB data format.

Data standards are not static; they evolve to adapt to changing requirements, clarify ambiguities, and fix bugs, among other reasons. The typical life cycle of format extensions is outlined below.

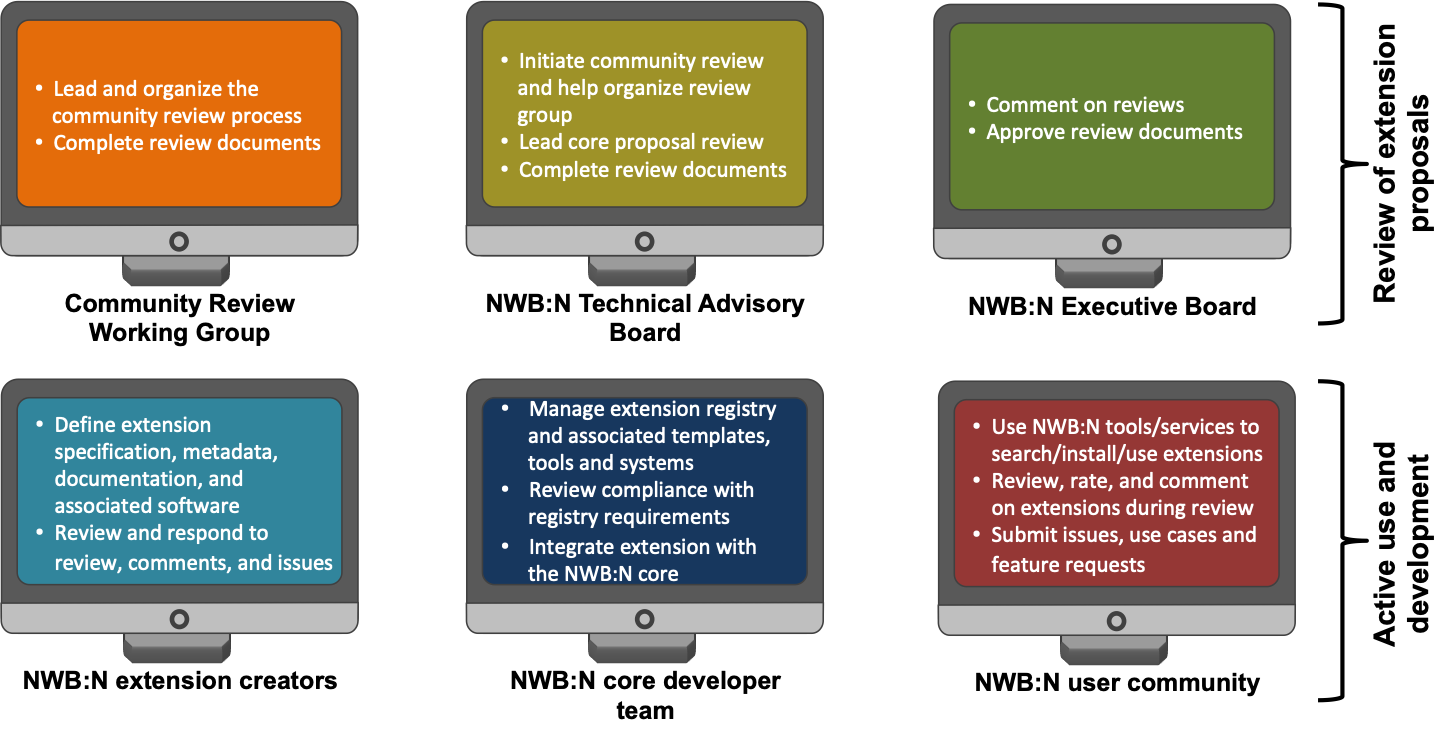

During active use of an extension, the main stakeholders are the developers of the extension, the NWB core development team, and the end users of the extension. Review of extensions for acceptance into the NWB core data standard is led by the NWB Technical Advisory Board (TAB), the NWB Executive Board (EB), and the community review working group, with comments, requests for changes, and updates provided by the other stakeholders (i.e., the extension developers, users, and the core development team).

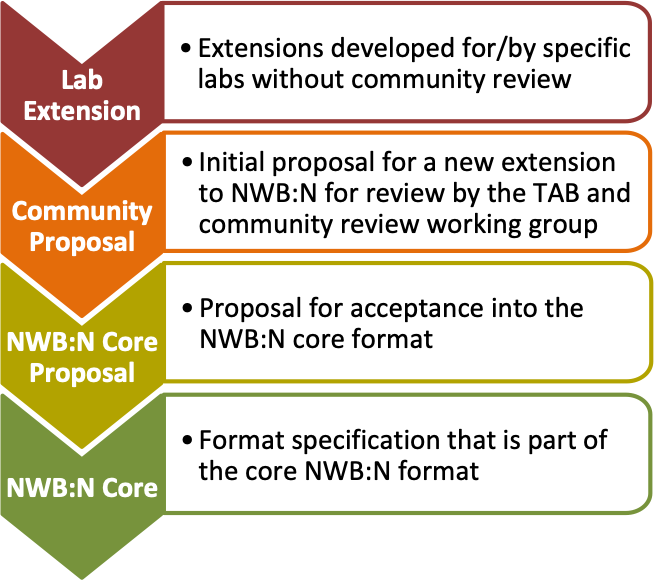

To guide and clearly communicate progress in the review and acceptance process, we use a multi-phased process for acceptance of Neurodata Extensions (NDX). As “Lab Extensions” have not yet entered the review process, they MAY be either internal or public NDX. NDX in review (i.e., NDX in any phase other than Lab Extension) MUST be public NDX.
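The visibility rule above can be written down as a small check. This is only an illustrative sketch: the `Phase` enum and the `visibility_is_valid` function are hypothetical names introduced here, and the phase list is limited to the phases named in the text.

```python
from enum import Enum


class Phase(Enum):
    """Review phases named in the text; the identifiers are illustrative."""

    LAB_EXTENSION = "Lab Extension"
    COMMUNITY_PROPOSAL = "Community Proposal"
    NWB_CORE_PROPOSAL = "NWB Core Proposal"


def visibility_is_valid(phase: Phase, is_public: bool) -> bool:
    """Apply the rule from the text.

    Lab Extensions MAY be internal or public; an NDX in any other
    phase MUST be public.
    """
    if phase is Phase.LAB_EXTENSION:
        return True
    return is_public
```

For example, `visibility_is_valid(Phase.COMMUNITY_PROPOSAL, is_public=False)` evaluates to `False`, because an NDX in review must be public.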

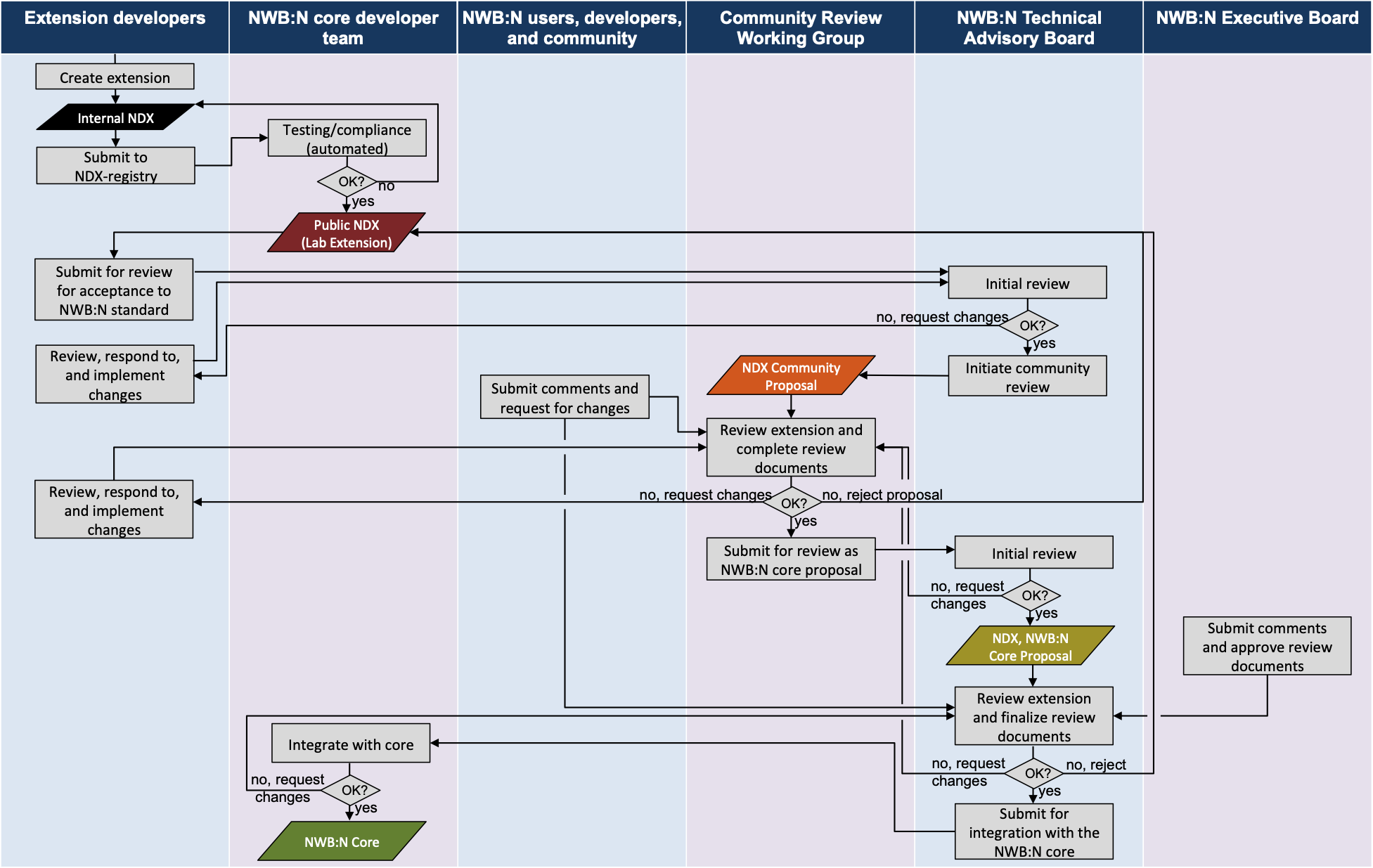

The flowchart above illustrates the main process for the creation, review, and acceptance of extensions as part of the NWB core data standard. The process begins with a development team creating a new extension. During initial development, this will often be an internal NDX. Once a working draft of the extension exists, the team submits the extension to the NDX Catalog by creating a pull request on the staged-extensions repository. The NWB core development team tests compliance of the extension with NWB standards (typically via automated tests). Once accepted into the NDX Catalog, the extension becomes a public NDX with “Lab Extension” status.

To initiate the review of the extension for integration with the NWB core, the creators/owners of the extension submit the extension for review to the NWB Technical Advisory Board (TAB). A request to create a Community Proposal may be submitted to the TAB at any time; it MUST contain a public NDX and SHOULD include basic drafts of the additional documents required for review. One (or more) members of the TAB will conduct an initial review of the request. At this point, the TAB may: 1) request changes to the proposal, or 2) accept the proposal for review. If the proposal is accepted for review, the TAB will initiate the community review process and create a working group that is responsible for leading the community review. The working group may consist of members of the TAB, EB, developers, users, and experts from the community. The developers of the extension MAY contribute to the working group to facilitate the review, but MUST NOT lead the review and decision-making process.
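The MUST and SHOULD requirements in this paragraph can be sketched as a pre-submission check. The following is a minimal, hypothetical example: `CommunityProposalRequest`, its fields, and `check_request` are names invented here for illustration and are not part of any NWB tool or official schema.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class CommunityProposalRequest:
    """Hypothetical record of what is sent to the TAB (not an official schema)."""

    ndx_is_public: bool
    has_draft_review_documents: bool
    extension_developers: Tuple[str, ...] = ()
    working_group_lead: Optional[str] = None


def check_request(request: CommunityProposalRequest) -> Tuple[List[str], List[str]]:
    """Return (errors, warnings).

    Violations of a MUST or MUST NOT rule are errors;
    unmet SHOULD recommendations are warnings.
    """
    errors: List[str] = []
    warnings: List[str] = []
    if not request.ndx_is_public:
        errors.append("proposal MUST contain a public NDX")
    if not request.has_draft_review_documents:
        warnings.append("proposal SHOULD include basic drafts of the review documents")
    if request.working_group_lead in request.extension_developers:
        errors.append("extension developers MUST NOT lead the review")
    return errors, warnings
```

Separating errors from warnings mirrors the difference between the MUST and SHOULD keywords: a missing draft document does not block submission, whereas a non-public NDX does.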

The NDX is now a Community Proposal. The goal of the Community Proposal review phase is to complete the base technical review and associated review documents and to give the community the opportunity to contribute to the review. All main review documents MUST be public via the NDX-custom-record repository to ensure the community can submit comments during the review. Comments and input are typically submitted directly as part of discussions of the working group or via GitHub issues and PRs on the NDX-custom-record repo (and NDX-custom repo if appropriate).

As a result of the community review phase, the working group may: 1) request changes to the NDX to address issues identified in the review, 2) reject the proposal (so that the NDX would remain a regular lab extension), or 3) submit the proposal to the TAB for acceptance.

If the NDX is accepted by the TAB, the working group may submit a request to create an NWB Core Proposal. This request MUST contain a public NDX and MUST include the completed review documents from the Community Proposal review. One (or more) members of the TAB will conduct an initial review of the request and may: 1) request changes, or 2) accept the proposal for review as an NWB Core Proposal. If accepted for review, the extension is now an official “NWB Core Proposal.”

The “NWB Core Proposal” review phase focuses on the technical merits of the extension and its compliance with NWB standards and metrics. Depending on the results of this review, the TAB may: 1) reject the proposal (so that the NDX remains a regular lab extension), 2) submit a request for changes to the working group (while, depending on the changes requested, either maintaining the Core Proposal status or changing the status back to Community Proposal), or 3) accept the proposal and request integration with the NWB core. If deemed necessary, the TAB may also submit the proposal to the NWB Executive Board (EB) for approval. The NWB community (including all users, developers, members of the NWB EB and TAB, and the broader community) may submit comments and requests for changes throughout the proposal review process. If the proposal is accepted, the TAB will submit a request for integration of the extension to the NWB developer team. This is typically done by creating an issue on the corresponding repositories for the NWB core specification and APIs (e.g., PyNWB, MatNWB, and nwb-schema).

If the integration is successful, the extension becomes officially part of the NWB core standard. If issues are identified as part of the integration process with the core, the developer team may submit requests for changes by creating corresponding issues on the NDX-custom-record repository. The additional changes must be reviewed and approved by the TAB and the review documents updated accordingly.
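The status changes described in this process can be summarized as a small state machine. The sketch below is a simplification under stated assumptions: the `Status` enum, the event names, and the `advance` function are hypothetical, and the several submission and acceptance steps between Community Proposal and NWB Core Proposal are collapsed into a single event.

```python
from enum import Enum


class Status(Enum):
    """Extension statuses named in the text; identifiers are illustrative."""

    LAB_EXTENSION = "Lab Extension"
    COMMUNITY_PROPOSAL = "Community Proposal"
    NWB_CORE_PROPOSAL = "NWB Core Proposal"
    NWB_CORE = "Part of the NWB core standard"


# (current status, event) -> next status. Event names are assumptions
# chosen for this sketch, not official terminology.
TRANSITIONS = {
    (Status.LAB_EXTENSION, "tab_requests_changes"): Status.LAB_EXTENSION,
    (Status.LAB_EXTENSION, "tab_accepts_for_review"): Status.COMMUNITY_PROPOSAL,
    (Status.COMMUNITY_PROPOSAL, "working_group_requests_changes"): Status.COMMUNITY_PROPOSAL,
    (Status.COMMUNITY_PROPOSAL, "working_group_rejects"): Status.LAB_EXTENSION,
    (Status.COMMUNITY_PROPOSAL, "tab_accepts_core_proposal_for_review"): Status.NWB_CORE_PROPOSAL,
    (Status.NWB_CORE_PROPOSAL, "tab_rejects"): Status.LAB_EXTENSION,
    (Status.NWB_CORE_PROPOSAL, "tab_requests_changes_keep_status"): Status.NWB_CORE_PROPOSAL,
    (Status.NWB_CORE_PROPOSAL, "tab_requests_changes_back_to_community"): Status.COMMUNITY_PROPOSAL,
    (Status.NWB_CORE_PROPOSAL, "integration_successful"): Status.NWB_CORE,
}


def advance(status: Status, event: str) -> Status:
    """Return the next status, or raise ValueError if the event is not allowed."""
    try:
        return TRANSITIONS[(status, event)]
    except KeyError:
        raise ValueError(f"{event!r} is not allowed from {status.value!r}") from None
```

Keeping the transitions in a plain dictionary makes the allowed paths easy to audit against the flowchart: any pair that is missing from the table, such as moving directly from Lab Extension to the NWB core, is rejected.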

To support the formal review and evaluation of extensions to be integrated into the NWB core, we will develop a set of format review documents, questionnaires, and a rating system to enable the community and working groups to evaluate critical quality metrics of extensions in a formal and reproducible manner. This will help guide the review process and help ensure compliance of extensions with key requirements of the NWB data standard. Some relevant quality metrics and standards include:

The above list is a collection of the kinds of questions developers and users will commonly ask. The list is not exhaustive and should be expanded to cover points relevant to the specific extension proposal. For points that are not relevant to the specific proposal, reviewers should ideally indicate that they are not applicable (and why). The list is intended to provide a helpful template and guideline for reviewing extensions, rather than a strict form.

The public review of NDX proposals for integration with the NWB core is facilitated by the NDX Catalog and the corresponding ndx-custom-record repository. As part of the review process, the reviewers create and maintain review documents (in RST format) in a /review folder of the corresponding ndx-custom-record repository. Comments and requests for changes can then be submitted and processed via GitHub issues and pull requests on the ndx-custom-record repository. Requests for changes that arise from the review can in turn be passed to the developer team via issues on the corresponding ndx-custom repository (or by email if no issue tracker is available for ndx-custom). The NDX Catalog further facilitates the review process by enabling reviewers to search for and identify related extensions. For internal discussion, the review teams may also decide to use other mechanisms (e.g., Google Docs) to prepare review documents and track discussions; however, the main review documents MUST be made public via the ndx-custom-record repository so that they are available for public review.
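As a rough illustration of the repository layout described above, the following sketch lists the RST review documents in the /review folder of a local checkout of a record repository. The function name `list_review_documents` is hypothetical, and the sketch assumes the documents sit directly inside the folder with a `.rst` file extension; the text does not specify file names or subfolders.

```python
from pathlib import Path
from typing import List


def list_review_documents(record_repo: Path) -> List[Path]:
    """Return the RST files found directly in <record_repo>/review, sorted by name.

    Returns an empty list if the review folder does not exist, for
    example because the review has not started yet.
    """
    review_dir = record_repo / "review"
    if not review_dir.is_dir():
        return []
    return sorted(review_dir.glob("*.rst"))
```

A reviewer could call `list_review_documents(Path("ndx-custom-record"))` on a local clone to confirm that the main review documents are present before pointing the community to them.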